1.3 pbrt: System Overview

pbrt is structured using standard object-oriented techniques: abstract base classes are defined for important entities (e.g., a Shape abstract base class defines the interface that all geometric shapes must implement, the Light abstract base class acts similarly for lights, etc.). The majority of the system is implemented purely in terms of the interfaces provided by these abstract base classes; for example, the code that checks for occluding objects between a light source and a point being shaded calls the Shape intersection methods and doesn’t need to consider the particular types of shapes that are present in the scene. This approach makes it easy to extend the system, as adding a new shape only requires implementing a class that implements the Shape interface and linking it into the system.
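The following is a minimal sketch of this design, not pbrt's actual declarations: the `Ray`, `Shape`, and `Sphere` types here are heavily simplified stand-ins, and `Occluded()` is a hypothetical helper. It shows how an occlusion test can be written purely against the abstract interface.

```cpp
#include <cmath>
#include <memory>
#include <vector>

// Hypothetical, simplified stand-in for a ray: origin plus normalized direction.
struct Ray {
    double ox, oy, oz;
    double dx, dy, dz;
};

// Abstract interface that every shape in this sketch must implement.
class Shape {
  public:
    virtual ~Shape() = default;
    // True if the ray hits the shape at some distance t with 0 < t < tMax.
    virtual bool IntersectP(const Ray &ray, double tMax) const = 0;
};

class Sphere : public Shape {
  public:
    Sphere(double cx, double cy, double cz, double r)
        : cx(cx), cy(cy), cz(cz), r(r) {}
    bool IntersectP(const Ray &ray, double tMax) const override {
        // Solve |o + t d - c|^2 = r^2 for t, assuming |d| = 1.
        double lx = ray.ox - cx, ly = ray.oy - cy, lz = ray.oz - cz;
        double b = lx * ray.dx + ly * ray.dy + lz * ray.dz;
        double c = lx * lx + ly * ly + lz * lz - r * r;
        double disc = b * b - c;
        if (disc < 0) return false;
        double s = std::sqrt(disc);
        double t0 = -b - s, t1 = -b + s;
        return (t0 > 0 && t0 < tMax) || (t1 > 0 && t1 < tMax);
    }

  private:
    double cx, cy, cz, r;
};

// Occlusion test that never needs to know which concrete shapes exist.
bool Occluded(const std::vector<std::unique_ptr<Shape>> &shapes,
              const Ray &ray, double distToLight) {
    for (const auto &s : shapes)
        if (s->IntersectP(ray, distToLight)) return true;
    return false;
}
```

Adding another shape to this sketch would only require a new subclass of `Shape`; `Occluded()` would not change.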

pbrt is written using a total of 10 key abstract base classes, summarized in Table 1.1. Adding a new implementation of one of these types to the system is straightforward; the implementation must inherit from the appropriate base class, be compiled and linked into the executable, and the object creation routines in Appendix B must be modified to create instances of the object as needed as the scene description file is parsed. Section B.4 discusses extending the system in this manner in more detail.

| Base class | Directory | Section |
|---|---|---|
| Shape | shapes/ | 3.1 |
| Aggregate | accelerators/ | 4.2 |
| Camera | cameras/ | 6.1 |
| Sampler | samplers/ | 7.2 |
| Filter | filters/ | 7.8 |
| Material | materials/ | 9.2 |
| Texture | textures/ | 10.3 |
| Medium | media/ | 11.3 |
| Light | lights/ | 12.2 |
| Integrator | integrators/ | 1.3.3 |

The pbrt source code distribution is available from pbrt.org. (A large collection of example scenes is also available as a separate download.) All of the code for the pbrt core is in the src/core directory, and the main() function is contained in the short file main/pbrt.cpp. Various implementations of instances of the abstract base classes are in separate directories: src/shapes has implementations of the Shape base class, src/materials has implementations of Material, and so forth.

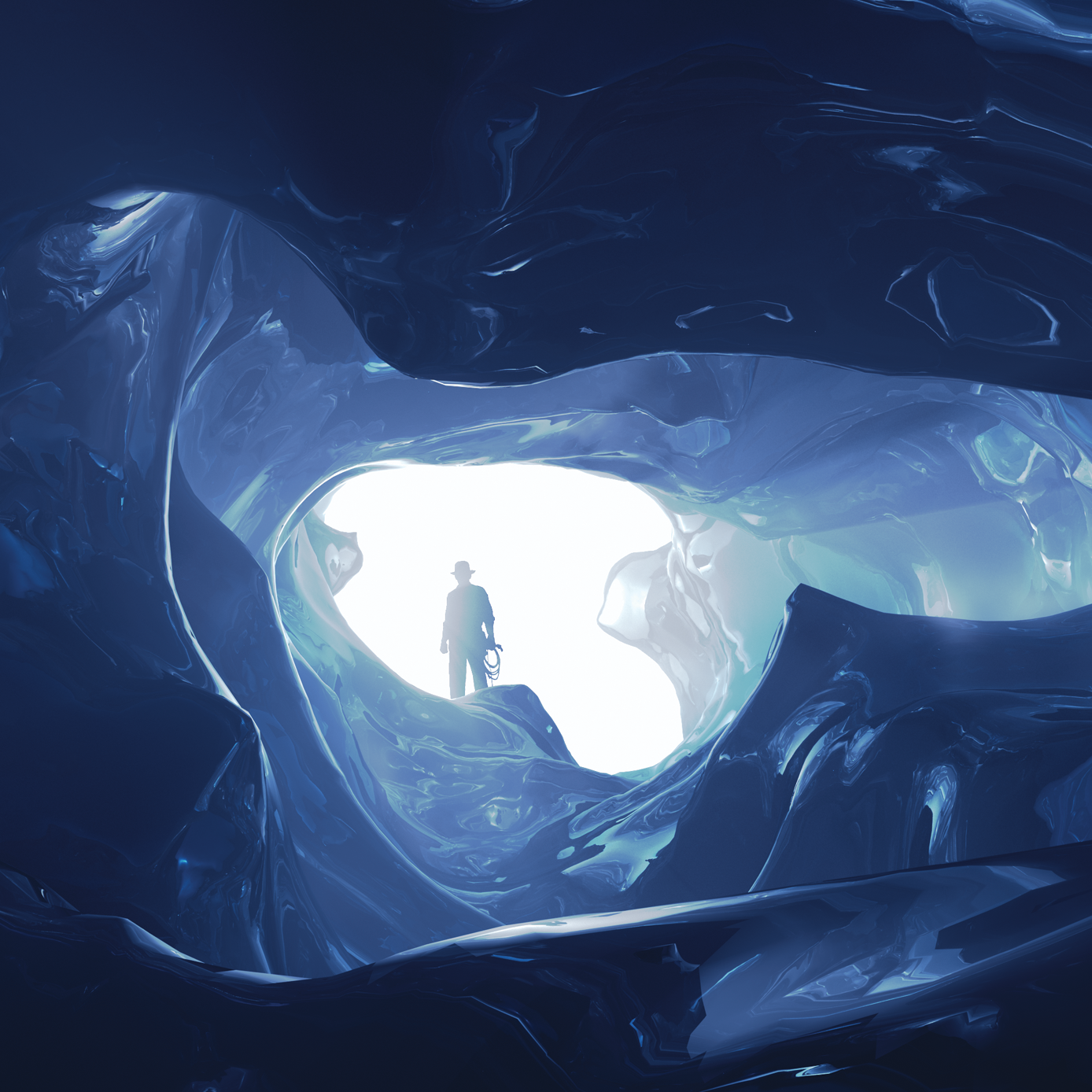

Throughout this section are a number of images rendered with extended versions of pbrt. Of them, Figures 1.11 through 1.14 are notable: not only are they visually impressive but also each of them was created by a student in a rendering course where the final class project was to extend pbrt with new functionality in order to render an interesting image. These images are among the best from those courses.

1.3.1 Phases of Execution

pbrt can be conceptually divided into two phases of execution. First, it parses the scene description file provided by the user. The scene description is a text file that specifies the geometric shapes that make up the scene, their material properties, the lights that illuminate them, where the virtual camera is positioned in the scene, and parameters to all of the individual algorithms used throughout the system. Each statement in the input file has a direct mapping to one of the routines in Appendix B; these routines comprise the procedural interface for describing a scene. The scene file format is documented on the pbrt Web site, pbrt.org.

The end results of the parsing phase are an instance of the Scene class and an instance of the Integrator class. The Scene contains a representation of the contents of the scene (geometric objects, lights, etc.), and the Integrator implements an algorithm to render it. The integrator is so-named because its main task is to evaluate the integral from Equation (1.1).

Once the scene has been specified, the second phase of execution begins, and the main rendering loop executes. This phase is where pbrt usually spends the majority of its running time, and most of this book describes code that executes during this phase. The rendering loop is performed by executing an implementation of the Integrator::Render() method, which is the focus of Section 1.3.4.

This chapter will describe a particular Integrator subclass named SamplerIntegrator, whose Render() method determines the light arriving at a virtual film plane for a large number of rays that model the process of image formation. After the contributions of all of these film samples have been computed, the final image is written to a file. The scene description data in memory are deallocated, and the system then resumes processing statements from the scene description file until no more remain, allowing the user to specify another scene to be rendered, if desired.

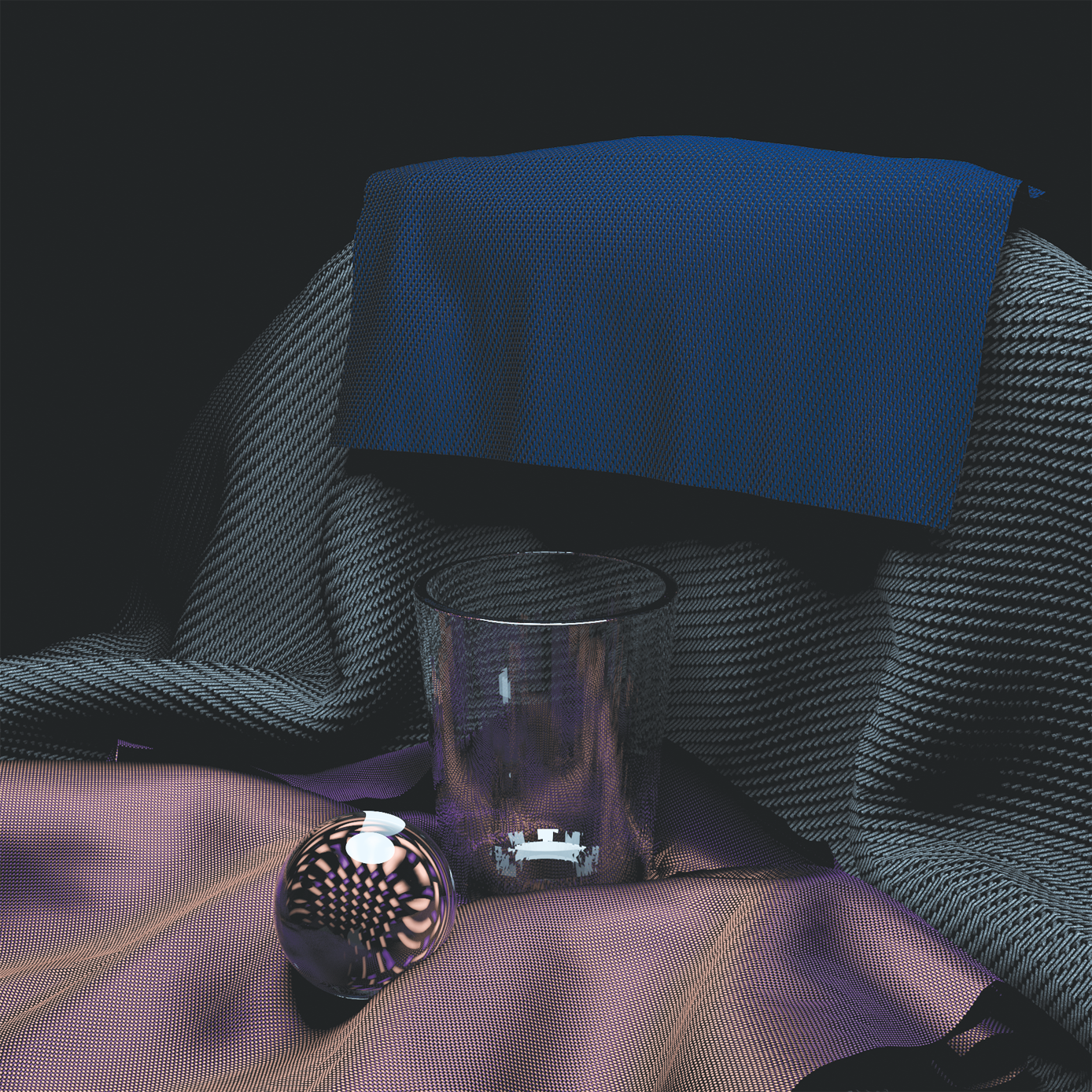

Figures 1.15 and 1.16 were rendered with LuxRender, a GPL-licensed rendering system originally based on the pbrt source code from the first edition of the book. (See www.luxrender.net for more information about LuxRender.)

1.3.2 Scene Representation

pbrt’s main() function can be found in the file main/pbrt.cpp. This function is quite simple; it first loops over the provided command-line arguments in argv, initializing values in the Options structure and storing the filenames provided in the arguments. Running pbrt with --help as a command-line argument prints all of the options that can be specified on the command line. The fragment that parses the command-line arguments, <<Process command-line arguments>>, is straightforward and therefore not included in the book here.

The options structure is then passed to the pbrtInit() function, which does systemwide initialization. The main() function then parses the given scene description(s), leading to the creation of a Scene and an Integrator. After all rendering is done, pbrtCleanup() does final cleanup before the system exits.

The pbrtInit() and pbrtCleanup() functions appear in a mini-index in the page margin, along with the number of the page where they are actually defined. The mini-indices have pointers to the definitions of almost all of the functions, classes, methods, and member variables used or referred to on each page.

If pbrt is run with no input filenames provided, then the scene description is read from standard input. Otherwise it loops through the provided filenames, processing each file in turn.

The ParseFile() function parses a scene description file, either from standard input or from a file on disk; it returns false if it was unable to open the file. The mechanics of parsing scene description files will not be described in this book; the parser implementation can be found in the lex and yacc files core/pbrtlex.ll and core/pbrtparse.y, respectively. Readers who want to understand the parsing subsystem but are not familiar with these tools may wish to consult Levine, Mason, and Brown (1992).

We use the common UNIX idiom that a file named “-” represents standard input.

If a particular input file can’t be opened, the Error() routine reports this information to the user. Error() uses the same format string semantics as printf().
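The control flow described above can be sketched as follows. This is an illustration under stated assumptions rather than pbrt's code: `ParseFileSketch()` and `ProcessInputs()` are hypothetical names, the stand-in parser only checks that a file can be opened, and `fprintf()` takes the place of `Error()` to show the printf-style format string.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Hypothetical stand-in for ParseFile(): it only checks that the input can
// be opened. The name "-" denotes standard input, which is always available.
bool ParseFileSketch(const std::string &filename) {
    if (filename == "-") return true;  // a real parser would read stdin here
    std::FILE *f = std::fopen(filename.c_str(), "r");
    if (!f) return false;
    std::fclose(f);
    return true;
}

// Processes each input in turn and returns how many could not be opened.
// With no filenames at all, the scene comes from standard input.
int ProcessInputs(const std::vector<std::string> &filenames) {
    if (filenames.empty()) return ParseFileSketch("-") ? 0 : 1;
    int failures = 0;
    for (const std::string &f : filenames) {
        if (!ParseFileSketch(f)) {
            // printf-style reporting, standing in for Error().
            std::fprintf(stderr, "Couldn't open scene file \"%s\"\n", f.c_str());
            ++failures;
        }
    }
    return failures;
}
```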

As the scene file is parsed, objects are created that represent the lights and geometric primitives in the scene. These are all stored in the Scene object, which is created by the RenderOptions::MakeScene() method in Section B.3.7 in Appendix B. The Scene class is declared in core/scene.h and defined in core/scene.cpp.

Each light source in the scene is represented by a Light object, which specifies the shape of a light and the distribution of energy that it emits. The Scene stores all of the lights using a vector of shared_ptr instances from the C++ standard library. pbrt uses shared pointers to track how many times objects are referenced by other instances. When the last instance holding a reference (the Scene in this case) is destroyed, the reference count reaches zero and the Light can be safely freed, which happens automatically at that point.
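To make the ownership model concrete, here is a small sketch using the standard library's `shared_ptr`. `LightSketch`, `SceneSketch`, and `TotalPower()` are hypothetical names introduced only for this illustration; the real Light and Scene classes carry far more state.

```cpp
#include <memory>
#include <vector>

// Hypothetical stand-in for a light: just an emitted power value.
struct LightSketch {
    double power;
};

// Hypothetical stand-in for the Scene: it holds shared references to lights.
struct SceneSketch {
    std::vector<std::shared_ptr<LightSketch>> lights;
};

// Any code with access to the scene can walk the single global light list.
double TotalPower(const SceneSketch &scene) {
    double sum = 0;
    for (const std::shared_ptr<LightSketch> &l : scene.lights) sum += l->power;
    return sum;
}
```

When the last `shared_ptr` referring to a light goes out of scope (here, when the `SceneSketch` is destroyed and no one else holds a copy), the light is freed without any explicit `delete`.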

While some renderers support separate light lists per geometric object, allowing a light to illuminate only some of the objects in the scene, this idea does not map well to the physically based rendering approach taken in pbrt, so pbrt only supports a single global per-scene list. Many parts of the system need access to the lights, so the Scene makes them available as a public member variable.

Each geometric object in the scene is represented by a Primitive, which combines two objects: a Shape that specifies its geometry, and a Material that describes its appearance (e.g., the object’s color, whether it has a dull or glossy finish). All of the geometric primitives are collected into a single aggregate Primitive in the Scene member variable Scene::aggregate. This aggregate is a special kind of primitive that itself holds references to many other primitives. Because it implements the Primitive interface it appears no different from a single primitive to the rest of the system. The aggregate implementation stores all the scene’s primitives in an acceleration data structure that reduces the number of unnecessary ray intersection tests with primitives that are far away from a given ray.

The constructor caches the bounding box of the scene geometry in the worldBound member variable.

The bound is made available via the WorldBound() method.

Some Light implementations find it useful to do some additional initialization after the scene has been defined but before rendering begins. The Scene constructor calls their Preprocess() methods to allow them to do so.

The Scene class provides two methods related to ray–primitive intersection. Its Intersect() method traces the given ray into the scene and returns a Boolean value indicating whether the ray intersected any of the primitives. If so, it fills in the provided SurfaceInteraction structure with information about the closest intersection point along the ray. The SurfaceInteraction structure is defined in Section 4.1.

A closely related method is Scene::IntersectP(), which checks for the existence of intersections along the ray but does not return any information about those intersections. Because this routine doesn’t need to search for the closest intersection or compute any additional information about intersections, it is generally more efficient than Scene::Intersect(). This routine is used for shadow rays.
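The difference between the two queries can be sketched with a brute-force loop over spheres. This is only an illustration: pbrt delegates both queries to the aggregate's acceleration structure, and every name below (`SphereSketch`, `HitDistance()`, `IntersectSketch()`, `IntersectPSketch()`) is hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3 { double x, y, z; };
inline double Dot(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

struct SphereSketch { Vec3 center; double radius; };

// Smallest positive hit distance along o + t*d (d normalized), or -1 on a miss.
double HitDistance(const SphereSketch &s, const Vec3 &o, const Vec3 &d) {
    Vec3 l{o.x - s.center.x, o.y - s.center.y, o.z - s.center.z};
    double b = Dot(l, d), c = Dot(l, l) - s.radius * s.radius;
    double disc = b * b - c;
    if (disc < 0) return -1;
    double root = std::sqrt(disc);
    double t = -b - root;
    if (t <= 0) t = -b + root;
    return t > 0 ? t : -1;
}

// Closest-hit query: every primitive must be examined to find the nearest one.
bool IntersectSketch(const std::vector<SphereSketch> &prims, const Vec3 &o,
                     const Vec3 &d, double *tHit, std::size_t *index) {
    bool hit = false;
    double closest = std::numeric_limits<double>::infinity();
    for (std::size_t i = 0; i < prims.size(); ++i) {
        double t = HitDistance(prims[i], o, d);
        if (t > 0 && t < closest) { closest = t; *index = i; hit = true; }
    }
    if (hit) *tHit = closest;
    return hit;
}

// Any-hit query: it can stop at the first intersection found.
bool IntersectPSketch(const std::vector<SphereSketch> &prims, const Vec3 &o,
                      const Vec3 &d, int *testsPerformed) {
    *testsPerformed = 0;
    for (const SphereSketch &s : prims) {
        ++*testsPerformed;
        if (HitDistance(s, o, d) > 0) return true;
    }
    return false;
}
```

The early return in the any-hit variant is what makes it a good fit for shadow rays, where only the existence of an occluder matters.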

1.3.3 Integrator Interface and SamplerIntegrator

Rendering an image of the scene is handled by an instance of a class that implements the Integrator interface. Integrator is an abstract base class that defines the Render() method that must be provided by all integrators. In this section, we will define one Integrator implementation, the SamplerIntegrator. The basic integrator interfaces are defined in core/integrator.h, and some utility functions used by integrators are in core/integrator.cpp. The implementations of the various integrators are in the integrators directory.

The method that Integrators must provide is Render(); it is passed a reference to the Scene to use to compute an image of the scene or more generally, a set of measurements of the scene lighting. This interface is intentionally kept very general to permit a wide range of implementations—for example, one could implement an Integrator that takes measurements only at a sparse set of positions distributed through the scene rather than generating a regular 2D image.

In this chapter, we’ll focus on SamplerIntegrator, which is an Integrator subclass, and the WhittedIntegrator, which implements the SamplerIntegrator interface. (Implementations of other SamplerIntegrators will be introduced in Chapters 14 and 15; the integrators in Chapter 16 inherit directly from Integrator.) The name of the SamplerIntegrator derives from the fact that its rendering process is driven by a stream of samples from a Sampler; each such sample identifies a point on the image at which the integrator should compute the arriving light to form the image.

The SamplerIntegrator stores a pointer to a Sampler. The role of the sampler is subtle, but its implementation can substantially affect the quality of the images that the system generates. First, the sampler is responsible for choosing the points on the image plane from which rays are traced. Second, it is responsible for supplying the sample positions used by integrators for estimating the value of the light transport integral, Equation (1.1). For example, some integrators need to choose random points on light sources to compute illumination from area lights. Generating a good distribution of these samples is an important part of the rendering process that can substantially affect overall efficiency; this topic is the main focus of Chapter 7.

The Camera object controls the viewing and lens parameters such as position, orientation, focus, and field of view. A Film member variable inside the Camera class handles image storage. The Camera classes are described in Chapter 6, and Film is described in Section 7.9. The Film is responsible for writing the final image to a file and possibly displaying it on the screen as it is being computed.

The SamplerIntegrator constructor stores pointers to these objects in member variables. The SamplerIntegrator is created in the RenderOptions::MakeIntegrator() method, which is in turn called by pbrtWorldEnd(), which is called by the input file parser when it is done parsing a scene description from an input file and is ready to render the scene.

SamplerIntegrator implementations may optionally implement the Preprocess() method. It is called after the Scene has been fully initialized and gives the integrator a chance to do scene-dependent computation, such as allocating additional data structures that are dependent on the number of lights in the scene, or precomputing a rough representation of the distribution of radiance in the scene. Implementations that don’t need to do anything along these lines can leave this method unimplemented.
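A rough sketch of this arrangement follows. The class names are hypothetical and the signatures are simplified (for example, the scene is reduced to a light count); the point is only that `Render()` is mandatory while `Preprocess()` has a do-nothing default that subclasses may override.

```cpp
#include <vector>

// Hypothetical, minimal scene: only the number of lights matters here.
struct SceneSketch {
    int lightCount = 0;
};

// Every integrator must provide Render().
class IntegratorSketch {
  public:
    virtual ~IntegratorSketch() = default;
    virtual void Render(const SceneSketch &scene) = 0;
};

class SamplerIntegratorSketch : public IntegratorSketch {
  public:
    // Optional hook; the default implementation does nothing.
    virtual void Preprocess(const SceneSketch &) {}
    void Render(const SceneSketch &scene) override {
        Preprocess(scene);  // scene-dependent setup before any rays are traced
        rendered = true;    // placeholder for the main rendering loop
    }
    bool rendered = false;
};

// A subclass that sizes a per-light table once the scene is known.
class PerLightIntegratorSketch : public SamplerIntegratorSketch {
  public:
    void Preprocess(const SceneSketch &scene) override {
        perLightData.assign(scene.lightCount, 0.0);
    }
    std::vector<double> perLightData;
};
```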

1.3.4 The Main Rendering Loop

After the Scene and the Integrator have been allocated and initialized, the Integrator::Render() method is invoked, starting the second phase of pbrt’s execution: the main rendering loop. In the SamplerIntegrator’s implementation of this method, at each of a series of positions on the image plane, the method uses the Camera and the Sampler to generate a ray into the scene and then uses the Li() method to determine the amount of light arriving at the image plane along that ray. This value is passed to the Film, which records the light’s contribution. Figure 1.17 summarizes the main classes used in this method and the flow of data among them.

So that rendering can proceed in parallel on systems with multiple processing cores, the image is decomposed into small tiles of pixels. Each tile can be processed independently and in parallel. The ParallelFor() function, which is described in more detail in Section A.6, implements a parallel for loop, where multiple iterations may run in parallel. A C++ lambda expression provides the loop body. Here, a variant of ParallelFor() that loops over a 2D domain is used to iterate over the image tiles.

There are two factors to trade off in deciding how large to make the image tiles: load-balancing and per-tile overhead. On one hand, we’d like to have significantly more tiles than there are processors in the system: consider a four-core computer with only four tiles. In general, it’s likely that some of the tiles will take less processing time than others; the ones that are responsible for parts of the image where the scene is relatively simple will usually take less processing time than parts of the image where the scene is relatively complex. Therefore, if the number of tiles was equal to the number of processors, some processors would finish before others and sit idle while waiting for the processor that had the longest running tile. Figure 1.18 illustrates this issue; it shows the distribution of execution time for the tiles used to render the shiny sphere scene in Figure 1.7. The longest running one took 151 times longer than the shortest one.

On the other hand, having tiles that are too small is also inefficient. There is a small fixed overhead for a processing core to determine which loop iteration it should run next; the more tiles there are, the more times this overhead must be paid.

For simplicity, pbrt always uses $16 \times 16$ tiles; this granularity works well for almost all images, except for very low-resolution ones. We implicitly assume that the small image case isn’t particularly important to render at maximum efficiency. The Film’s GetSampleBounds() method returns the extent of pixels over which samples must be generated for the image being rendered. The addition of tileSize - 1 in the computation of nTiles results in a number of tiles that is rounded to the next higher integer when the sample bounds along an axis are not exactly divisible by 16. This means that the lambda function invoked by ParallelFor() must be able to deal with partial tiles containing some unused pixels.
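The rounding can be sketched as below. `Point2i` and `Bounds2i` are simplified stand-ins for pbrt's types, and `CountTiles()` is a hypothetical helper; only the `tileSize - 1` trick is taken from the text.

```cpp
// Simplified stand-ins for pbrt's integer point and bounds types.
struct Point2i { int x, y; };
struct Bounds2i { Point2i pMin, pMax; };  // pMax is exclusive

// Number of tiles along each axis, rounding up so that a partial tile is
// still counted when the extent is not a multiple of tileSize.
Point2i CountTiles(const Bounds2i &sampleBounds, int tileSize) {
    int width = sampleBounds.pMax.x - sampleBounds.pMin.x;
    int height = sampleBounds.pMax.y - sampleBounds.pMin.y;
    return {(width + tileSize - 1) / tileSize,
            (height + tileSize - 1) / tileSize};
}
```

For a 1920 by 1080 sample extent and a tile size of 16, this gives 120 tiles horizontally and 68 vertically; the last row of tiles is only 8 pixels tall.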

When the parallel for loop implementation that is defined in Appendix A.6.4 decides to run a loop iteration on a particular processor, the lambda will be called with the tile’s coordinates. It starts by doing a little bit of setup work, determining which part of the film plane it is responsible for and allocating space for some temporary data before using the Sampler to generate image samples, the Camera to determine corresponding rays leaving the film plane, and the Li() method to compute radiance along those rays arriving at the film.

Implementations of the Li() method will generally need to temporarily allocate small amounts of memory for each radiance computation. The large number of resulting allocations can easily overwhelm the system’s regular memory allocation routines (e.g., malloc() or new), which must maintain and synchronize elaborate internal data structures to track sets of free memory regions among processors. A naive implementation could potentially spend a fairly large fraction of its computation time in the memory allocator.

To address this issue, we will pass an instance of the MemoryArena class to the Li() method. MemoryArena instances manage pools of memory to enable higher performance allocation than what is possible with the standard library routines.

The arena’s memory pool is always released in its entirety, which removes the need for complex internal data structures. Instances of this class can only be used by a single thread—concurrent access without additional synchronization is not permitted. We create a unique MemoryArena for each loop iteration that can be used directly, which also ensures that the arena is only accessed by a single thread.
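The idea can be illustrated with a small bump allocator. This is a sketch under simplifying assumptions, not the MemoryArena implementation: `ArenaSketch` is a hypothetical class with a fixed-size buffer that returns `nullptr` when exhausted, and like the class described above it is not safe for concurrent use.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical fixed-capacity arena: allocation just advances an offset.
class ArenaSketch {
  public:
    explicit ArenaSketch(std::size_t capacity) : buffer(capacity), offset(0) {}

    // Returns a suitably aligned block, or nullptr if the buffer is full.
    void *Alloc(std::size_t nBytes) {
        const std::size_t align = alignof(std::max_align_t);
        nBytes = (nBytes + align - 1) & ~(align - 1);  // round up to alignment
        if (offset + nBytes > buffer.size()) return nullptr;
        void *p = buffer.data() + offset;
        offset += nBytes;
        return p;
    }

    // Releases everything at once; no per-allocation bookkeeping is needed.
    void Reset() { offset = 0; }

    std::size_t BytesInUse() const { return offset; }

  private:
    std::vector<unsigned char> buffer;
    std::size_t offset;
};
```

Because memory is only ever released in bulk, `Alloc()` reduces to a bounds check and an addition, with no free lists and no locking.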

Most Sampler implementations find it useful to maintain some state, such as the coordinates of the current pixel being sampled. This means that multiple processing threads cannot use a single Sampler concurrently. Therefore, Samplers provide a Clone() method to create a new instance of a given Sampler; it takes a seed that is used by some implementations to seed a pseudo-random number generator so that the same sequence of pseudo-random numbers isn’t generated in every tile. (Note that not all Samplers use pseudo-random numbers; those that don’t just ignore the seed.)
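A sketch of per-tile cloning is shown below. `RandomSamplerSketch` is a hypothetical sampler built on `std::mt19937`, and deriving the seed from the tile's linear index in `TileSeed()` is an assumption made for this example; any scheme that gives each tile a distinct seed would serve the same purpose.

```cpp
#include <cstdint>
#include <memory>
#include <random>

// Hypothetical sampler whose only state is a pseudo-random number generator.
class RandomSamplerSketch {
  public:
    explicit RandomSamplerSketch(std::uint32_t seed = 0) : rng(seed) {}

    // Each clone owns its own generator, so threads never share state.
    std::unique_ptr<RandomSamplerSketch> Clone(std::uint32_t seed) const {
        return std::unique_ptr<RandomSamplerSketch>(
            new RandomSamplerSketch(seed));
    }

    // Uniform value in [0, 1): mt19937 yields 32-bit values, scaled by 2^-32.
    double Get1D() { return rng() * (1.0 / 4294967296.0); }

  private:
    std::mt19937 rng;
};

// Assumed seeding scheme for this sketch: the tile's linear index.
int TileSeed(int tileX, int tileY, int nTilesX) {
    return tileY * nTilesX + tileX;
}
```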

Next, the extent of pixels to be sampled in this loop iteration is computed based on the tile indices. Two issues must be accounted for in this computation: first, the overall pixel bounds to be sampled may not be equal to the full image resolution. For example, the user may have specified a “crop window” of just a subset of pixels to sample. Second, if the image resolution isn’t an exact multiple of 16, then the tiles on the right and bottom edges of the image won’t be a full $16 \times 16$.
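Both issues are handled by offsetting from the lower corner of the sample bounds and clamping to its upper corner, as in this sketch. `Point2i`, `Bounds2i`, and `TileBounds()` are simplified or hypothetical names used only for illustration.

```cpp
#include <algorithm>

// Simplified stand-ins for pbrt's integer point and bounds types.
struct Point2i { int x, y; };
struct Bounds2i { Point2i pMin, pMax; };  // pMax is exclusive

// Pixel extent covered by the tile with the given indices.
Bounds2i TileBounds(const Bounds2i &sampleBounds, Point2i tile, int tileSize) {
    // Start from pMin so that crop windows are respected.
    int x0 = sampleBounds.pMin.x + tile.x * tileSize;
    int y0 = sampleBounds.pMin.y + tile.y * tileSize;
    // Clamp so that edge tiles do not extend past the sample bounds.
    int x1 = std::min(x0 + tileSize, sampleBounds.pMax.x);
    int y1 = std::min(y0 + tileSize, sampleBounds.pMax.y);
    return {{x0, y0}, {x1, y1}};
}
```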

Finally, a FilmTile is acquired from the Film. This class provides a small buffer of memory to store pixel values for the current tile. Its storage is private to the loop iteration, so pixel values can be updated without worrying about other threads concurrently modifying the same pixels. The tile is merged into the film’s storage once the work for rendering it is done; serializing concurrent updates to the image is handled then.

Rendering can now proceed. The implementation loops over all of the pixels in the tile using a range-based for loop that automatically uses iterators provided by the Bounds2 class. The cloned Sampler is notified that it should start generating samples for the current pixel, and samples are processed in turn until StartNextSample() returns false. (As we’ll see in Chapter 7, taking multiple samples per pixel can greatly improve final image quality.)
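The shape of this loop can be sketched with a stripped-down sampler. `SamplerSketch` and `CountSamples()` are hypothetical; the sketch assumes that `StartNextSample()` returns false once the pixel's sample budget is used up, which makes a do-while loop the natural fit since every pixel gets at least one sample.

```cpp
// Hypothetical sampler that only tracks the current sample index.
class SamplerSketch {
  public:
    explicit SamplerSketch(int spp) : samplesPerPixel(spp), current(0) {}
    void StartPixel() { current = 0; }
    // Advances to the next sample; false when the pixel is finished.
    bool StartNextSample() { return ++current < samplesPerPixel; }

  private:
    int samplesPerPixel;
    int current;
};

// Visits every sample of every pixel and returns how many were processed.
int CountSamples(SamplerSketch &sampler, int nPixels) {
    int n = 0;
    for (int pixel = 0; pixel < nPixels; ++pixel) {
        sampler.StartPixel();
        do {
            ++n;  // placeholder for: generate ray, call Li(), add to film tile
        } while (sampler.StartNextSample());
    }
    return n;
}
```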

The CameraSample structure records the position on the film for which the camera should generate the corresponding ray. It also stores time and lens position sample values, which are used when rendering scenes with moving objects and for camera models that simulate non-pinhole apertures, respectively.

The Camera interface provides two methods to generate rays: Camera::GenerateRay(), which returns the ray for a given image sample position, and Camera::GenerateRayDifferential(), which returns a ray differential that incorporates information about the rays that the Camera would generate for samples that are one pixel away on the image plane in both the $x$ and $y$ directions. Ray differentials are used to get better results from some of the texture functions defined in Chapter 10, making it possible to compute how quickly a texture varies with respect to the pixel spacing, a key component of texture antialiasing.

After the ray differential has been returned, the ScaleDifferentials() method scales the differential rays to account for the actual spacing between samples on the film plane for the case where multiple samples are taken per pixel.

The camera also returns a floating-point weight associated with the ray. For simple camera models, each ray is weighted equally, but camera models that more accurately model the process of image formation by lens systems may generate some rays that contribute more than others. Such a camera model might simulate the effect of less light arriving at the edges of the film plane than at the center, an effect called vignetting. The returned weight will be used later to scale the ray’s contribution to the image.

Note the capitalized floating-point type Float: depending on the compilation flags of pbrt, this is an alias for either float or double. More detail on this design choice is provided in Section A.1.

Given a ray, the next task is to determine the radiance arriving at the image plane along that ray. The Li() method takes care of this task.

Li() is a pure virtual method that returns the incident radiance at the origin of a given ray; each subclass of SamplerIntegrator must provide an implementation of this method. The parameters to Li() are the following:

- ray: the ray along which the incident radiance should be evaluated.

- scene: the Scene being rendered. The implementation will query the scene for information about the lights and geometry, and so on.

- sampler: a sample generator used to solve the light transport equation via Monte Carlo integration.

- arena: a MemoryArena for efficient temporary memory allocation by the integrator. The integrator should assume that any memory it allocates with the arena will be freed shortly after the Li() method returns and thus should not use the arena to allocate any memory that must persist for longer than is needed for the current ray.

- depth: the number of ray bounces from the camera that have occurred up until the current call to Li().

The method returns a Spectrum that represents the incident radiance at the origin of the ray.

A common side effect of bugs in the rendering process is that impossible radiance values are computed. For example, division by zero results in radiance values equal either to the IEEE floating-point infinity or “not a number” value. The renderer looks for this possibility, as well as for spectra with negative contributions, and prints an error message when it encounters them. Here we won’t include the fragment that does this, <<Issue warning if unexpected radiance value is returned>>. See the implementation in core/integrator.cpp if you’re interested in its details.
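A check along these lines might look like the following sketch. It is not the omitted fragment: the spectrum is reduced to three `double` components, and `RadianceIssue` and `CheckRadiance()` are hypothetical names.

```cpp
#include <array>
#include <cmath>

enum class RadianceIssue { None, NotANumber, Negative, Infinite };

// Classifies the first problem found in an RGB radiance value, if any.
RadianceIssue CheckRadiance(const std::array<double, 3> &rgb) {
    for (double c : rgb)
        if (std::isnan(c)) return RadianceIssue::NotANumber;
    for (double c : rgb)
        if (c < 0) return RadianceIssue::Negative;
    for (double c : rgb)
        if (std::isinf(c)) return RadianceIssue::Infinite;
    return RadianceIssue::None;
}
```

A renderer could report the issue and substitute black for the offending sample so that one bad value does not corrupt the surrounding pixels; whether to do so is a policy choice outside this sketch.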

After the radiance arriving at the ray’s origin is known, the image can be updated: the FilmTile::AddSample() method updates the pixels in the tile’s image given the results from a sample. The details of how sample values are recorded in the film are explained in Sections 7.8 and 7.9.

After processing a sample, all of the allocated memory in the MemoryArena is freed together when MemoryArena::Reset() is called. (See Section 9.1.1 for an explanation of how the MemoryArena is used to allocate memory to represent BSDFs at intersection points.)

Once radiance values for all of the samples in a tile have been computed, the FilmTile is handed off to the Film’s MergeFilmTile() method, which handles adding the tile’s pixel contributions to the final image. Note that the std::move() function is used to transfer ownership of the unique_ptr to MergeFilmTile().

After all of the loop iterations have finished, the SamplerIntegrator’s Render() method calls the Film’s WriteImage() method to write the image out to a file.

1.3.5 An Integrator for Whitted Ray Tracing

Chapters 14 and 15 include the implementations of many different integrators, based on a variety of algorithms with differing levels of accuracy. Here we will present an integrator based on Whitted’s ray-tracing algorithm. This integrator accurately computes reflected and transmitted light from specular surfaces like glass, mirrors, and water, although it doesn’t account for other types of indirect lighting effects like light bouncing off a wall and illuminating a room. The WhittedIntegrator class can be found in the integrators/whitted.h and integrators/whitted.cpp files in the pbrt distribution.

The Whitted integrator works by recursively evaluating radiance along reflected and refracted ray directions. It stops the recursion at a predetermined maximum depth, WhittedIntegrator::maxDepth. By default, the maximum recursion depth is five. Without this termination criterion, the recursion might never terminate (imagine, e.g., a hall-of-mirrors scene). This member variable is initialized in the WhittedIntegrator constructor based on parameters set in the scene description file.
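The effect of the depth limit can be seen in a toy model of two facing mirrors, sketched below. `HallOfMirrors` and `LiSketch()` are hypothetical, and the `depth + 1 < maxDepth` form of the test is an assumption of this sketch: each surface adds a fixed emitted term and reflects a fixed fraction of whatever the next bounce returns.

```cpp
// Hypothetical scene state: every bounce sees the same emission and
// reflectance, as between two parallel mirrors.
struct HallOfMirrors {
    double emitted;
    double reflectance;
    int maxDepth;
    int calls;  // counts how many times LiSketch() was invoked
};

// Radiance along a ray at the given bounce depth.
double LiSketch(HallOfMirrors &scene, int depth) {
    ++scene.calls;
    double L = scene.emitted;
    // Without this test the recursion would never end.
    if (depth + 1 < scene.maxDepth)
        L += scene.reflectance * LiSketch(scene, depth + 1);
    return L;
}
```

With `maxDepth` set to five, an emitted term of 1, and a reflectance of 0.5, the recursion is evaluated five times and returns 1 + 0.5 + 0.25 + 0.125 + 0.0625 = 1.9375.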

As a SamplerIntegrator implementation, the WhittedIntegrator must provide an implementation of the Li() method, which returns the radiance arriving at the origin of the given ray. Figure 1.19 summarizes the data flow among the main classes used during integration at surfaces.

The first step is to find the first intersection of the ray with the shapes in the scene. The Scene::Intersect() method takes a ray and returns a Boolean value indicating whether it intersected a shape. For rays where an intersection was found, it initializes the provided SurfaceInteraction with geometric information about the intersection.

If no intersection was found, radiance may be carried along the ray due to light sources that don’t have associated geometry. One example of such a light is the InfiniteAreaLight, which can represent illumination from the sky. The Light::Le() method allows such lights to return their radiance along a given ray.

Otherwise a valid intersection has been found. The integrator must determine how light is scattered by the surface of the shape at the intersection point, determine how much illumination is arriving from light sources at the point, and apply an approximation to Equation (1.1) to compute how much light is leaving the surface in the viewing direction. Because this integrator ignores the effect of participating media like smoke or fog, the radiance leaving the intersection point is the same as the radiance arriving at the ray’s origin.

Figure 1.20 shows a few quantities that will be used frequently in the fragments to come. n is the surface normal at the intersection point and the normalized direction from the hit point back to the ray origin is stored in wo; Cameras are responsible for normalizing the direction component of generated rays, so there’s no need to renormalize it here. Normalized directions are denoted by the $\omega$ symbol in this book, and in pbrt’s code we will use wo to represent $\omega_o$, the outgoing direction of scattered light.

If an intersection was found, it’s necessary to determine how the surface’s material scatters light. The ComputeScatteringFunctions() method handles this task, evaluating texture functions to determine surface properties and then initializing a representation of the BSDF (and possibly BSSRDF) at the point. This method generally needs to allocate memory for the objects that constitute this representation; because this memory only needs to be available for the current ray, the MemoryArena is provided for it to use for its allocations.

In case the ray happened to hit geometry that is emissive (such as an area light source), the integrator accounts for any emitted radiance by calling the SurfaceInteraction::Le() method. This gives the first term of the light transport equation, Equation (1.1). If the object is not emissive, this method returns a black spectrum.

For each light, the integrator calls the Light::Sample_Li() method to compute the radiance from that light falling on the surface at the point being shaded. This method also returns the direction vector from the point being shaded to the light source, which is stored in the variable wi (denoting an incident direction $\omega_i$).

The spectrum returned by this method does not account for the possibility that some other shape may block light from the light and prevent it from reaching the point being shaded. Instead, it returns a VisibilityTester object that can be used to determine if any primitives block the surface point from the light source. This test is done by tracing a shadow ray between the point being shaded and the light to verify that the path is clear. pbrt’s code is organized in this way so that it can avoid tracing the shadow ray unless necessary: this way it can first make sure that the light falling on the surface would be scattered in the direction $\omega_o$ if the light isn’t blocked. For example, if the surface is not transmissive, then light arriving at the back side of the surface doesn’t contribute to reflection.

The Sample_Li() method also returns the probability density for the light to have sampled the direction wi in the pdf variable. This value is used for Monte Carlo integration with complex area light sources where light is arriving at the point from many directions even though just one direction is sampled here; for simple lights like point lights, the value of pdf is one. The details of how this probability density is computed and used in rendering are the topic of Chapters 13 and 14; in the end, the light’s contribution must be divided by pdf, so this is done by the implementation here.

If the arriving radiance is nonzero and the BSDF indicates that some of the incident light from the direction $\omega_i$ is in fact scattered to the outgoing direction $\omega_o$, then the integrator multiplies the radiance value by the value of the BSDF and the cosine term. The cosine term is computed using the AbsDot() function, which returns the absolute value of the dot product between two vectors. If the vectors are normalized, as both wi and n are here, this is equal to the absolute value of the cosine of the angle between them (Section 2.2.1).

This product represents the light’s contribution to the light transport equation integral, Equation (1.1), and it is added to the total reflected radiance $L$. After all lights have been considered, the integrator has computed the total contribution of direct lighting: light that arrives at the surface directly from emissive objects (as opposed to light that has reflected off other objects in the scene before arriving at the point).
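The accumulation over lights can be sketched with scalar radiance values. Everything here is a simplification for illustration: `LightSampleSketch` bundles what a light would return for one shading point, the visibility result is precomputed as a flag, and the BSDF is reduced to a single constant `f`.

```cpp
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };
inline double AbsDot(const Vec3 &a, const Vec3 &b) {
    return std::abs(a.x * b.x + a.y * b.y + a.z * b.z);
}

// Hypothetical record of one light's sample at the shading point.
struct LightSampleSketch {
    double Li;     // radiance arriving from the light
    double pdf;    // probability density of having sampled wi
    Vec3 wi;       // normalized direction toward the light
    bool visible;  // result of the shadow-ray test
};

// Sums f * Li * |cos(theta)| / pdf over all unoccluded lights.
double DirectLighting(const std::vector<LightSampleSketch> &samples,
                      const Vec3 &n, double f) {
    double L = 0;
    for (const LightSampleSketch &s : samples) {
        if (s.Li == 0 || s.pdf == 0) continue;  // nothing arrives
        // Check the cheap BSDF condition before the visibility result.
        if (f != 0 && s.visible) L += f * s.Li * AbsDot(s.wi, n) / s.pdf;
    }
    return L;
}
```

In this sketch the `visible` flag is already known; in a renderer, placing the BSDF test first lets the shadow ray be skipped whenever the surface would not scatter the light toward the viewer anyway.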

This integrator also handles light scattered by perfectly specular surfaces like mirrors or glass. It is fairly simple to use properties of mirrors to find the reflected directions (Figure 1.21) and to use Snell’s law to find the transmitted directions (Section 8.2). The integrator can then recursively follow the appropriate ray in the new direction and add its contribution to the reflected radiance at the point originally seen from the camera. The computation of the effect of specular reflection and transmission is handled in separate utility methods so these functions can easily be reused by other SamplerIntegrator implementations.

In the SpecularReflect() and SpecularTransmit() methods, the BSDF::Sample_f() method returns an incident ray direction for a given outgoing direction and a given mode of light scattering. This method is one of the foundations of the Monte Carlo light transport algorithms that will be the subject of the last few chapters of this book. Here, we will use it to find only outgoing directions corresponding to perfect specular reflection or refraction, using flags to indicate to BSDF::Sample_f() that other types of reflection should be ignored. Although BSDF::Sample_f() can sample random directions leaving the surface for probabilistic integration algorithms, the randomness is constrained to be consistent with the BSDF’s scattering properties. In the case of perfect specular reflection or refraction, only one direction is possible, so there is no randomness at all.

The calls to BSDF::Sample_f() in these functions initialize wi with the chosen direction and return the BSDF’s value for the directions $(\omega_o, \omega_i)$. If the value of the BSDF is nonzero, the integrator uses the SamplerIntegrator::Li() method to get the incoming radiance along $\omega_i$, which in this case will in turn resolve to the WhittedIntegrator::Li() method.

In order to use ray differentials to antialias textures that are seen in reflections or refractions, it is necessary to know how reflection and transmission affect the screen-space footprint of rays. The fragments that compute the ray differentials for these rays are defined later, in Section 10.1.3. Given the fully initialized ray differential, a recursive call to Li() provides incident radiance, which is scaled by the value of the BSDF, the cosine term, and divided by the PDF, as per Equation (1.1).

The SpecularTransmit() method is essentially the same as SpecularReflect() but just requests the BSDF_TRANSMISSION specular component of the BSDF, if any, rather than the BSDF_REFLECTION component used by SpecularReflect(). We therefore won’t include its implementation in the text of the book here.